Supreme Court Bans the Use of Facial Recognition Technology by Police Without Consent

In a landmark ruling that underscores the evolving relationship between technology, civil liberties, and constitutional protections, the Supreme Court of India has declared that the use of facial recognition technology (FRT) by law enforcement authorities without explicit consent or a judicial warrant is unconstitutional.

This verdict not only reaffirms the citizen's fundamental right to privacy under Article 21 of the Indian Constitution but also raises pivotal questions about the unchecked use of artificial intelligence by state agencies in a democratic society.

Background: Rise of AI Surveillance in Law Enforcement

Over the past decade, Indian police departments, particularly in metropolitan cities, have increasingly turned to AI-powered facial recognition tools for surveillance and crowd monitoring. These tools match biometric markers, such as facial features, against databases to identify individuals in real time.

However, this surge in surveillance has prompted criticism from legal experts, civil rights organizations, and citizens alike. Complaints have emerged regarding:

Warrantless biometric scanning of individuals in public spaces like train stations and protests.

Lack of legislative oversight on how such data is collected, stored, or shared.

Disproportionate false positives among individuals from marginalized communities, often leading to harassment or wrongful detentions.

These concerns culminated in public interest litigations (PILs) filed before various High Courts and eventually escalated to the Supreme Court, seeking a judicial interpretation of the limits on FRT deployment.

Key Reasons Behind the Ban

The apex court considered the following critical factors in its ruling:

🔹 Violation of Individual Autonomy and Consent

The Court held that scanning the faces of individuals in public spaces without their free, informed, and prior consent amounts to a breach of bodily autonomy and dignity—a core component of the right to privacy affirmed in Justice K.S. Puttaswamy v. Union of India (2017).

🔹 Risk of Arbitrary Detentions

Evidence was presented highlighting numerous algorithmic inaccuracies, including instances where individuals were wrongfully flagged and questioned by law enforcement. Studies have shown that FRT systems tend to have higher error rates for people with darker skin tones, thus disproportionately affecting Scheduled Castes, Scheduled Tribes, and religious minorities.
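To make the idea of uneven error rates concrete, the sketch below computes a false positive rate per demographic group: the share of people who are not a true match but are flagged anyway. It is an illustration only. The function name, the record layout, and the toy numbers are assumptions made for this example and are not drawn from the judgment or from any study.

```python
from collections import defaultdict


def false_positive_rates(records):
    """Return the false positive rate for each group.

    `records` is an iterable of (group, flagged, is_true_match) tuples.
    Only people who are NOT a true match count toward the rate.
    """
    non_matches = defaultdict(int)          # people who should not be flagged
    flagged_non_matches = defaultdict(int)  # of those, how many were flagged
    for group, flagged, is_true_match in records:
        if is_true_match:
            continue
        non_matches[group] += 1
        if flagged:
            flagged_non_matches[group] += 1
    return {g: flagged_non_matches[g] / n for g, n in non_matches.items()}


# Hypothetical toy data, invented purely to show the arithmetic.
toy_records = (
    [("group_a", True, False)] * 1 + [("group_a", False, False)] * 99
    + [("group_b", True, False)] * 5 + [("group_b", False, False)] * 95
)

rates = false_positive_rates(toy_records)
for group, rate in sorted(rates.items()):
    print(f"{group}: {rate:.2%} of non-matching people wrongly flagged")
```

In this made-up example the second group is wrongly flagged five times as often as the first, which is the kind of disparity an audit of a deployed system would look for.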

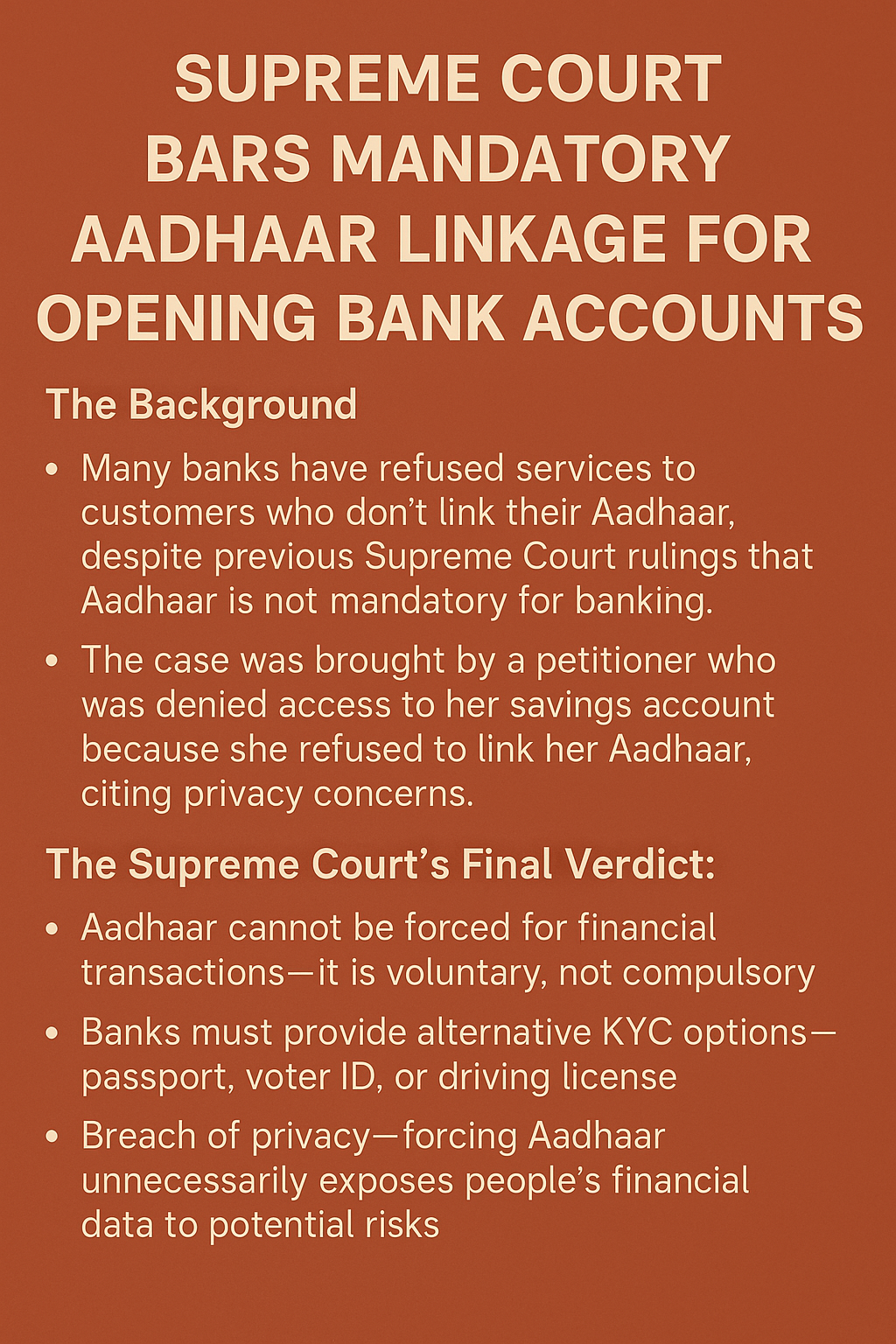

🔹 Absence of a Statutory Framework

The Court criticized the absence of dedicated data protection laws or a regulatory framework governing the use of FRT. Unlike jurisdictions such as the EU (under the GDPR) or parts of the United States, India lacks binding legislation specific to the use of biometric data by public authorities and its potential misuse.

Highlights of the Supreme Court Order

In a judgment with far-reaching implications for digital rights and AI governance, the Supreme Court directed the following:

✅ Use of FRT Restricted to Investigations with Judicial Oversight

Law enforcement agencies are now barred from deploying facial recognition software for general surveillance purposes. Use is permissible only when sanctioned by a judicial warrant in the context of a specific investigation.

✅ Mandatory Deletion of Biometric Data Post-Case Closure

The Court mandated that all facial recognition data collected for a specific case be deleted once the case concludes. Retention of biometric data beyond the necessary period will now require statutory justification.
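For readers who build or audit such systems, the first two directives can be read as two simple rules: no FRT query without a warrant tied to a specific investigation, and no retained face data once that case is closed. The sketch below is one possible way to express those rules in code, not a description of any real police system. The `Investigation` class, its field names, and the choice to also block queries on closed cases are assumptions made for illustration.

```python
from dataclasses import dataclass, field
from datetime import date
from typing import List, Optional


@dataclass
class Investigation:
    """Hypothetical record of one specific investigation."""
    case_id: str
    warrant_id: Optional[str] = None  # None: no judicial warrant on file
    closed_on: Optional[date] = None  # None: the case is still open
    face_records: List[str] = field(default_factory=list)


def may_run_frt(inv: Investigation) -> bool:
    """Allow an FRT query only with a warrant and, by assumption, an open case."""
    return inv.warrant_id is not None and inv.closed_on is None


def purge_if_closed(inv: Investigation) -> int:
    """Delete stored face data once the case is closed; return how many were removed."""
    if inv.closed_on is None:
        return 0
    removed = len(inv.face_records)
    inv.face_records.clear()
    return removed


# Usage with made-up identifiers.
general_sweep = Investigation(case_id="CASE-000")
warranted = Investigation(case_id="CASE-001", warrant_id="W-17",
                          face_records=["rec-1", "rec-2"])

print(may_run_frt(general_sweep))  # no warrant, so the query is refused
print(may_run_frt(warranted))      # warrant on file and case open

warranted.closed_on = date(2025, 1, 31)
print(purge_if_closed(warranted), "records deleted after closure")
```

Any statutory exception permitting longer retention, which the order leaves to future legislation, would need its own explicit rule rather than a silent default.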

✅ Parliamentary Oversight and Draft Legislation

The Court instructed the Union Government to draft comprehensive legislation that regulates the use of facial recognition and other AI-powered surveillance technologies, incorporating principles of necessity, proportionality, and accountability.

Implications for Indian Jurisprudence and Law Enforcement

This judgment is a watershed moment for India’s constitutional jurisprudence on surveillance and technological governance. It:

Reinforces the right to privacy as a check on digital surveillance.

Sets a global precedent, making India's Supreme Court one of the few constitutional courts to restrict FRT in the absence of dedicated legislation.

Establishes a judicial framework for the future regulation of emerging technologies under democratic scrutiny.

What’s Next?

The ball is now in the legislature's court. With the Digital Personal Data Protection Act, 2023, already in place but silent on FRT, the government is expected to introduce supplementary rules or standalone legislation to fill the regulatory vacuum. Civil society, legal scholars, and tech ethicists are likely to play an active role in shaping these policies.

For law students and practitioners, this judgment offers fertile ground for research and debate on:

Constitutional limits of surveillance in the digital age

Judicial interpretation of AI ethics

Comparative constitutional law on biometric privacy

Regulatory design for emerging technologies

Conclusion

The Supreme Court’s decision to restrict the use of facial recognition technology marks a crucial step toward protecting civil liberties in the digital age. As India navigates the complexities of surveillance, national security, and privacy, this judgment sets a clear tone: technology must operate within the bounds of the Constitution, not above it.
